Midjourney's New Model: A Deep Dive into Image Generation Evolution

Midjourney's Leap Forward: A New Era of AI Art is Here

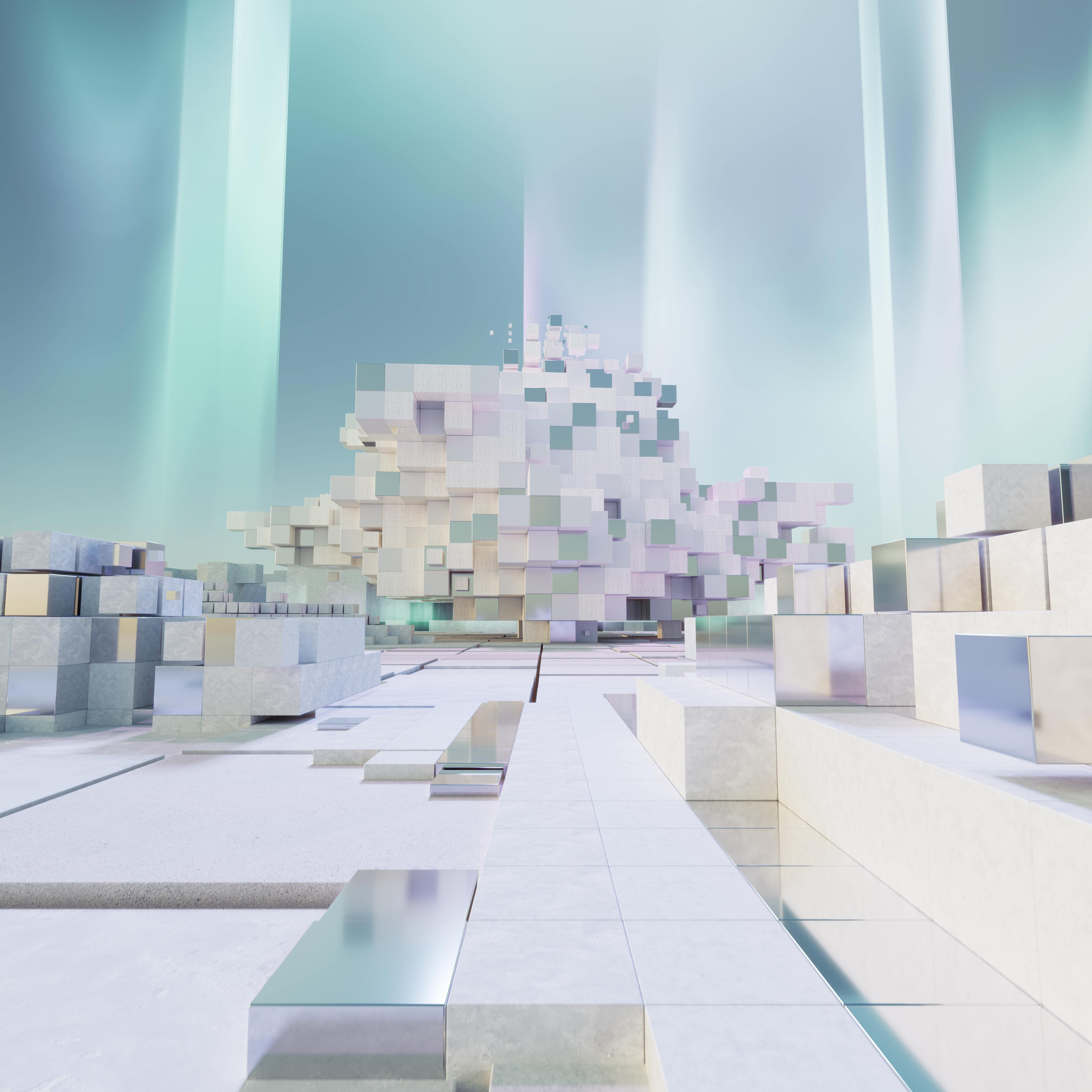

For over a year, the Midjourney community has been eagerly awaiting the next evolution of its image generation capabilities. We've seen incredible artistry bloom from the platform, transforming text prompts into breathtaking visuals. Now, the wait is over. Midjourney has unleashed its newest model into public alpha, and the implications are significant. This isn't just a minor update; it's a fundamental shift in how the AI interprets and renders your creative visions. Prepare to dive deep into the nuances of this exciting new development.

What's New? Unpacking the Key Features

The core of this update is, of course, the new image generation model itself. While the exact technical specifications remain somewhat shrouded in mystery (as is the Midjourney way!), early adopters and keen observers are already uncovering fascinating details. Let's break down the key highlights:

1. Public Alpha and the Promise of Iteration

The public alpha status is crucial. It means Midjourney is actively soliciting feedback from its user base and refining the model based on real-world usage. This collaborative approach allows for faster iteration and a more tailored experience for creators. We can expect regular updates and improvements in the coming weeks and months, shaped by what artists and enthusiasts report.

2. Personalization: Your Style, Your Way

The most exciting feature, and perhaps the most transformative, is that personalization is now enabled by default. The AI is designed to learn and adapt to your artistic preferences from the get-go. No more starting from a blank slate! This changes the creative workflow: instead of wrestling with generic outputs, you can build on your existing style and keep a more cohesive aesthetic across your generated images. Think of it as having a digital muse that understands your artistic voice.

Consider this scenario: you've been using Midjourney for a while, focusing on fantastical landscapes with a specific color palette and a brushstroke-like texture. With personalization enabled by default, the new model is designed to carry those elements into future generations, even when you don't prompt for them explicitly. This can shorten the cycle of tweaking and regenerating, saving time and effort and allowing for a more fluid, expressive creative process.

3. Enhanced Image Quality and Detail

While Midjourney hasn't released specific technical details, early reports suggest improvements in image quality and detail. Users are reporting sharper results, with more nuanced textures and a greater ability to handle complex subjects. This is especially noticeable in areas like facial features, intricate patterns, and the rendering of realistic materials. Imagine the difference this could make for creating photorealistic portraits or highly detailed concept art.

4. Improved Prompt Interpretation

One of the biggest challenges with any AI image generator is accurately interpreting text prompts. The new model seems to have made significant strides in this area. It's better at understanding complex prompts, handling nuanced descriptions, and translating abstract concepts into visual forms. This means less time spent tweaking prompts and more time spent exploring your creative ideas.

Putting the New Model to the Test: Case Studies and Examples

The true test of any new technology is how it performs in the real world. The scenarios below are illustrative rather than documented case studies; they show how the new Midjourney model's capabilities could fit into different creative workflows:

- Case Study 1: The Portrait Artist. A digital artist who specializes in creating stylized portraits can now leverage the personalization feature to streamline their workflow. Previously, they might have spent hours refining prompts to achieve a consistent style. With the new model, the AI learns their preferences, generating portraits that align with their artistic vision from the initial generation.

- Example 1: The Sci-Fi Concept Artist. Consider a concept artist designing alien landscapes. With the older models, they might have struggled to create consistent environments. The new model, with its improved detail and prompt interpretation, allows them to generate highly detailed and coherent alien worlds with greater ease. The artist can describe a specific planet, its atmosphere, and its unique flora, and the AI can render it with impressive accuracy.

- Case Study 2: The Fashion Designer. A fashion designer using AI to create mood boards and concept sketches can now generate more accurate and visually stunning representations of their ideas. The personalization feature allows the AI to learn the designer's preferred styles, silhouettes, and fabric textures, creating a cohesive collection of designs that reflect their artistic vision.

- Example 2: The Abstract Artist. An abstract artist, who often incorporates specific color palettes and patterns, can benefit greatly from the personalization feature. The AI will learn their preferred color combinations and abstract styles, allowing them to create a more consistent series of abstract artworks.

These are just a few examples. The key takeaway is that the new model is meant to help creators work faster, achieve more consistent results, and explore their artistic visions with greater freedom.

Actionable Takeaways: How to Make the Most of the New Model

So, how can you get started and make the most of this new Midjourney model? Here are some actionable steps:

- Embrace Experimentation. The public alpha is a playground for experimentation. Don't be afraid to try new prompts, explore different artistic styles, and push the boundaries of what's possible.

- Provide Detailed Feedback. As an alpha tester, your feedback is invaluable. Be specific about what you like, what you don't like, and what could be improved. The more detailed your feedback, the better the model will become.

- Refine Your Prompts. Even with improved prompt interpretation, clear and concise prompts are still essential. Experiment with different keywords, phrases, and modifiers to achieve the desired results.

- Leverage Personalization. This is the key differentiator. Use the model consistently and give it the chance to learn your style. The more signal it has about your preferences, the more personalized your outputs should become. Start with prompts that reflect your existing artistic preferences.

- Stay Informed. Keep an eye on the Midjourney community for updates, tutorials, and tips. The community is a valuable resource for learning about the latest features and techniques.
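
To make the "Refine Your Prompts" advice above more concrete, here is a minimal Python sketch of one way to experiment systematically with keywords and modifiers. It only builds prompt strings to paste into Midjourney; it does not call any Midjourney API. The function name `prompt_variants` and the sample subject, styles, and modifiers are hypothetical and chosen purely for illustration.

```python
from itertools import product


def prompt_variants(subject, styles, modifiers):
    """Return one prompt string per (style, modifier) combination.

    Hypothetical helper: it only assembles text and does not
    talk to Midjourney in any way.
    """
    return [
        f"{subject}, {style}, {modifier}"
        for style, modifier in product(styles, modifiers)
    ]


# Illustrative inputs; swap in your own subject and vocabulary.
variants = prompt_variants(
    "a misty mountain valley at dawn",
    styles=["watercolor", "oil painting"],
    modifiers=["soft pastel palette", "high contrast lighting"],
)

for prompt in variants:
    print(prompt)
```

Changing one element at a time and comparing the resulting images side by side makes it easier to see which keyword is responsible for which visual change, and gives you something specific to report as alpha feedback.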

Conclusion: A Glimpse into the Future of AI Art

Midjourney's new image generation model marks a significant step forward in the evolution of AI art. The public alpha, combined with the power of personalization, promises a more intuitive, creative, and personalized experience for artists of all levels. While the exact details of the model are still being revealed, the early results are promising. This update isn't just about generating images; it's about empowering creators to shape their own artistic journeys. As the model continues to evolve and be refined, we can expect even more exciting developments in the months and years to come. The future of AI art is bright, and Midjourney is leading the way.

This post was published as part of my automated content series.